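My earlier notes mostly used a queue to communicate or hand out work between threads or processes. Most multiprocess and multithreaded scenarios still need process locks and thread locks, though, so let's go straight to examples. These are my own, not copied from a Baidu search.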

Multiprocess code example:

#!/usr/bin/python3

#coding:utf-8

from multiprocessing import Pool,Manager,Lock

import pymysql

import time

import csv

lock = Lock()

def connectMysql():

conn = False

try:

conn = pymysql.Connect(host='127.0.0.1', port=3306, user='root', passwd='root', db='sulao', charset='utf8')

except:

print("Connect MySQL failed !")

conn = False

return conn

def getList(sql):

cur = connectMysql().cursor()

cur.execute(sql)

dat = cur.fetchall()

return dat

def writeCsv(q):

while q.qsize() > 0:

d = q.get()

try:

lock.acquire()

with open('article.csv', 'a', newline='') as f:

cvwrite = csv.writer(f)

cvwrite.writerow((d[0], d[1], d[2], d[3]))

except:

print("Write failed !")

finally:

lock.release()

if __name__ == "__main__":

start_time = time.time()

sql = '''SELECT log_ID,log_Title,log_Intro,log_PostTime FROM zbp_post WHERE log_Status=0 ORDER BY log_ID ASC'''

data = getList(sql)

print("Total %s" % len(data))

with open('article.csv', 'w', newline='') as f:

cvwrite = csv.writer(f)

cvwrite.writerow(['id','标题','简介','发布'])

q = Manager().Queue()

for d in data:

q.put(d)

p = Pool(4)

for i in range(4):

p.apply_async(writeCsv, args=(q, ))

p.close()

p.join()

end_time = time.time()

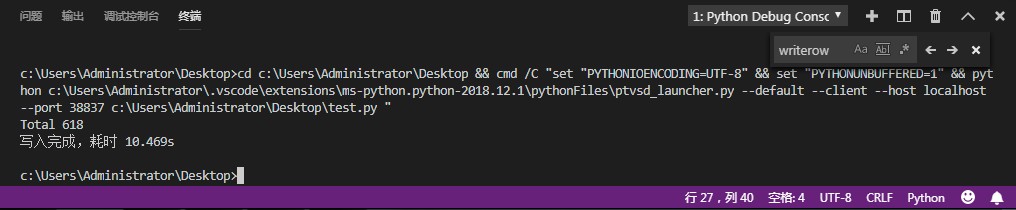

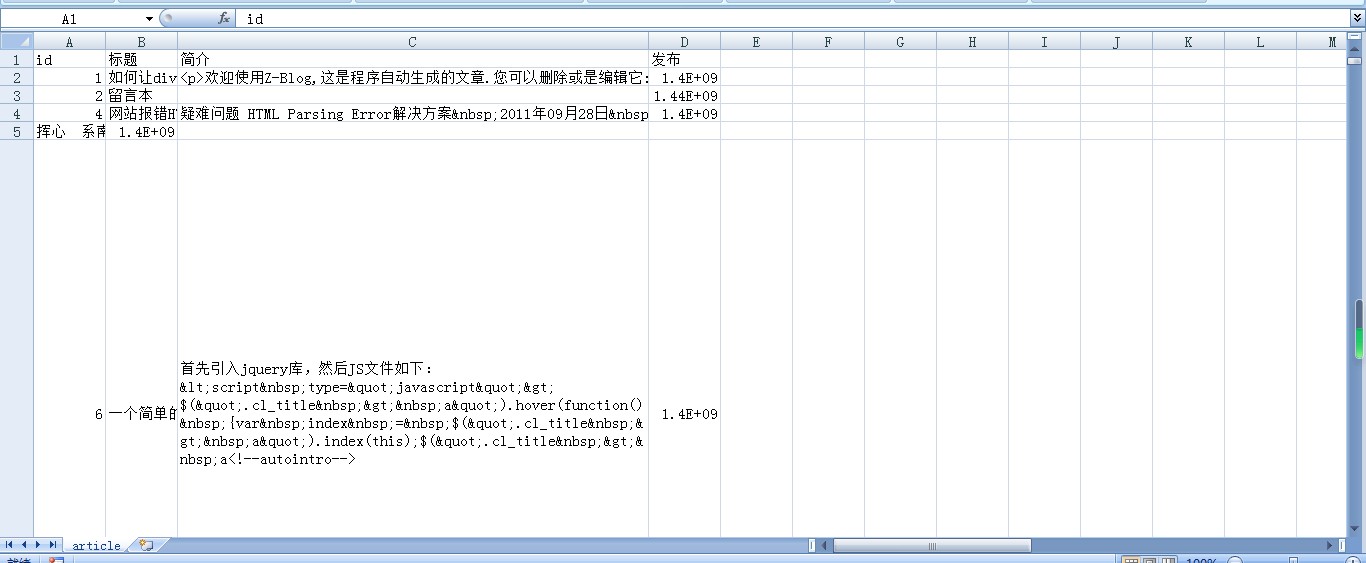

print("写入完成,耗时 %.3fs" % (end_time - start_time))运行结果如下图

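As we can see, without a lock the rows in the file are very likely to get mixed up, and the program may not even run properly: it can fail with an I/O error. Calling acquire() protects the resource we are writing to, so only the process that holds the lock can write. When the write is finished we call release() to free the lock and let the next process modify the resource. (A `with lock:` block performs the same acquire/release pair automatically.) This keeps a resource that several processes modify from being corrupted.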
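To make the acquire/release idea concrete, here is a minimal sketch of the same pattern without the database. The names `append_row`, `worker`, and `count_rows` are hypothetical helpers made up for this illustration, and a temporary file stands in for `article.csv`. It assumes the lock is handed to each `Process` as an argument.

```python
import csv
import os
import tempfile
from multiprocessing import Lock, Process


def append_row(lock, path, row):
    """Append one CSV row while holding the lock."""
    # `with lock:` acquires on entry and always releases on exit,
    # even if the write raises an exception.
    with lock:
        with open(path, "a", newline="", encoding="utf-8") as f:
            csv.writer(f).writerow(row)


def worker(lock, path, worker_id, n_rows):
    """Hypothetical worker: append n_rows rows tagged with its id."""
    for i in range(n_rows):
        append_row(lock, path, (worker_id, i))


def count_rows(path):
    """Count the CSV rows currently in the file."""
    with open(path, newline="", encoding="utf-8") as f:
        return sum(1 for _ in csv.reader(f))


if __name__ == "__main__":
    lock = Lock()
    fd, path = tempfile.mkstemp(suffix=".csv")
    os.close(fd)
    # Four processes share one lock and one output file.
    procs = [Process(target=worker, args=(lock, path, w, 50)) for w in range(4)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    print("rows written:", count_rows(path))
    os.remove(path)
```

One design note: a plain `multiprocessing.Lock` can be passed to `Process(...)` like this, but it cannot be sent to `Pool` workers as a task argument. With a `Pool`, a `Manager().Lock()` proxy or a pool initializer is the usual workaround.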

Multithreaded code example:

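#!/usr/bin/python3
# coding: utf-8
import csv
import time
from queue import Empty, Queue
from threading import Lock, Thread

import pymysql

# Threads share module globals, so one module-level lock is enough.
lock = Lock()


def connectMysql():
    # Return a connection, or None if connecting fails.
    try:
        return pymysql.Connect(host='127.0.0.1', port=3306, user='root', passwd='admin', db='sulao', charset='utf8')
    except pymysql.MySQLError:
        print("Connect MySQL failed !")
        return None


def getList(sql):
    conn = connectMysql()
    if conn is None:
        return ()
    try:
        with conn.cursor() as cur:
            cur.execute(sql)
            return cur.fetchall()
    finally:
        conn.close()


def writeCsv(q):
    # Each thread drains the shared queue until it is empty.
    while True:
        try:
            # get_nowait() cannot block forever if another thread
            # took the last item right after a qsize() check.
            d = q.get_nowait()
        except Empty:
            break
        try:
            # Hold the lock only while appending to the shared file.
            with lock:
                with open('article.csv', 'a', newline='') as f:
                    cvwrite = csv.writer(f)
                    cvwrite.writerow((d[0], d[1], d[2], d[3]))
        except OSError:
            print("Write failed !")
        finally:
            q.task_done()


if __name__ == "__main__":
    start_time = time.time()
    sql = '''SELECT log_ID,log_Title,log_Intro,log_PostTime FROM zbp_post WHERE log_Status=0 ORDER BY log_ID ASC'''
    data = getList(sql)
    print("Total %s" % len(data))
    # Write the header row once before the threads start appending.
    with open('article.csv', 'w', newline='') as f:
        cvwrite = csv.writer(f)
        cvwrite.writerow(['id', 'title', 'intro', 'posted'])
    q = Queue()
    for d in data:
        q.put(d)
    threads = []
    for i in range(4):
        t = Thread(target=writeCsv, args=(q, ))
        threads.append(t)
    # Start every thread first, then wait for all of them;
    # joining inside the start loop would run them one at a time.
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    end_time = time.time()
    print("Done writing, took %.3fs" % (end_time - start_time))

Those are the multiprocess and multithreaded lock examples. In a real application, choose between them according to your own needs. The examples above are for learning purposes only.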
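As a supplement to the threaded listing, here is a small sketch of the worker pattern on its own, with a list in place of the CSV file so it runs without MySQL. `drain` and `run_workers` are hypothetical names chosen for this illustration. It shows two details that are easy to get wrong: taking items with `get_nowait()` instead of checking `qsize()` first, and starting all threads before joining any of them.

```python
import threading
from queue import Empty, Queue


def drain(q, lock, out):
    """Take items until the queue is empty, appending each to `out`."""
    while True:
        try:
            # get_nowait() raises Empty instead of blocking, so a thread
            # cannot hang when another thread has taken the last item.
            item = q.get_nowait()
        except Empty:
            return
        # The lock guards the shared list, as it guards the file above.
        with lock:
            out.append(item)
        q.task_done()


def run_workers(items, n_threads=4):
    """Process all items with n_threads threads and return the results."""
    q = Queue()
    for item in items:
        q.put(item)
    lock = threading.Lock()
    out = []
    threads = [
        threading.Thread(target=drain, args=(q, lock, out))
        for _ in range(n_threads)
    ]
    # Start everything first so the threads actually overlap.
    for t in threads:
        t.start()
    # Only then wait for each one to finish.
    for t in threads:
        t.join()
    return out


if __name__ == "__main__":
    results = run_workers(range(20))
    print(len(results), "items processed")
```

The order of items in the result depends on thread scheduling, so treat it as unordered.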

Copyright notice: unless otherwise noted, all articles on this site are original.

When reposting, please credit the source: https://sulao.cn/post/620
