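Our requirement was to deploy a Harbor registry on k8s. The environment is mostly used for testing, so I didn't want to bother with certificates. Harbor is therefore served over plain HTTP and exposed through a NodePort. There is also no network storage, so local PVs are used to pin the storage to one specific node. In short, the whole setup is about keeping things simple. Let's go through the deployment steps.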

1. Install Helm

For installing Helm, see my earlier note: https://sulao.cn/post/1022

2. Add the Harbor chart repo and pull the Harbor chart

helm repo add harbor https://helm.goharbor.io

helm pull harbor/harbor --version 1.6.0

tar zxvf harbor-1.6.0.tgz

Note: do not pull the latest chart. I tried several versions, and with the same configuration only chart 1.6.0 deployed successfully. Chart 1.6.0 deploys Harbor 2.2.0.

3. Edit values.yaml

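Only the settings I changed are listed below. Leave everything else as it is in the original file. Passwords (for example harborAdminPassword) can be changed as you like.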

expose:
  type: nodePort
  tls:
    enabled: false
internalTLS:
  enabled: false
externalURL: http://10.0.1.184:30002
persistence:
  enabled: true
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      existingClaim: ""
      storageClass: "harbor-registry-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 100Gi
    jobservice:
      existingClaim: ""
      storageClass: "harbor-jobservice-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
    database:
      existingClaim: ""
      storageClass: "harbor-database-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
    redis:
      existingClaim: ""
      storageClass: "harbor-redis-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
    trivy:
      existingClaim: ""
      storageClass: "harbor-trivy-storage"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 10Gi
chartmuseum:
  enabled: false

metrics:
  enabled: false

4. Create the local PVs and apply them

First, create the local directories that the local PVs need:

mkdir -p /data/harbor/{chartmuseum,jobservice,registry,database,redis,trivy}

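chmod -R 777 /data/harbor/

Run these commands on the node that will hold the data, i.e. the node named in the PVs' nodeAffinity below. I want all storage directories on the local disk of a single node, so I use local PVs. Each PV's nodeAffinity ties it to that node. As a result, any pod whose PVC binds to one of these PVs can only be scheduled onto the node that holds the storage.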

The contents of host_pv.yaml:

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: registry
spec:
  capacity:
    storage: 100Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: harbor-registry-storage
  local:
    path: /data/harbor/registry
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - test-gt-ubuntu22-04-cmd-v1-2-32gb-25m
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jobservice
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: harbor-jobservice-storage
  local:
    path: /data/harbor/jobservice
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - test-gt-ubuntu22-04-cmd-v1-2-32gb-25m
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: database
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: harbor-database-storage
  local:
    path: /data/harbor/database
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - test-gt-ubuntu22-04-cmd-v1-2-32gb-25m
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: harbor-redis-storage
  local:
    path: /data/harbor/redis
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - test-gt-ubuntu22-04-cmd-v1-2-32gb-25m

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: trivy
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: harbor-trivy-storage
  local:
    path: /data/harbor/trivy
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - test-gt-ubuntu22-04-cmd-v1-2-32gb-25m

Then apply the manifest.
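The five PVs differ only in name, capacity, storageClassName and host path. Because of that, the manifest can also be generated with a small loop instead of being written by hand. This is a sketch of my own, not part of the original setup. NODE, BASE_DIR, VOLUMES and gen_pv are made-up names. NODE must be replaced with your own node name, as shown by `kubectl get nodes`. The storageClassName follows the harbor-<name>-storage pattern, so it still matches the storageClass values set in values.yaml.

```shell
#!/usr/bin/env bash
# Sketch: generate host_pv.yaml instead of hand-writing five near-identical PVs.
# NODE is the node used in this article (assumption: replace with your own).
NODE="test-gt-ubuntu22-04-cmd-v1-2-32gb-25m"
BASE_DIR="/data/harbor"

# name:size pairs, same sizes as in values.yaml
VOLUMES="registry:100Gi jobservice:10Gi database:10Gi redis:10Gi trivy:10Gi"

# Print one local PersistentVolume; storageClassName is harbor-<name>-storage
# so it matches persistence.persistentVolumeClaim.<name>.storageClass.
gen_pv() {
  local name=$1 size=$2
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: harbor-${name}-storage
  local:
    path: ${BASE_DIR}/${name}
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - ${NODE}
EOF
}

# Split each "name:size" pair and write all PVs into one manifest.
for pair in $VOLUMES; do
  gen_pv "${pair%%:*}" "${pair##*:}"
done > host_pv.yaml
```

The resulting host_pv.yaml is equivalent to the hand-written one above. It is applied the same way.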

kubectl apply -f host_pv.yaml

5. Deploy Harbor

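helm install harbor harbor/ -n harbor --create-namespace

Here harbor/ is the chart directory extracted in step 2, which contains the edited values.yaml. During installation many images could not be pulled. Once I had handled those images myself, everything came up normally.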
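Before moving on, it helps to confirm that every PVC created by the chart actually bound to one of the local PVs. A PVC stuck in Pending often means that a storageClass in values.yaml does not match any PV's storageClassName, or that the PVs were not created. The helper below is a sketch with a made-up name (all_pvcs_bound). It reads the output of `kubectl get pvc --no-headers`, where the second column is STATUS, and succeeds only if every line is Bound.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if every PVC line on stdin has STATUS (column 2) == Bound.
# all_pvcs_bound is a hypothetical helper, not a kubectl feature.
all_pvcs_bound() {
  awk '
    $2 != "Bound" { print "not bound: " $1 " (" $2 ")" > "/dev/stderr"; bad = 1 }
    END { exit (bad || NR == 0) }   # also fail when no PVCs were listed
  '
}

# Example usage against the cluster (requires kubectl access):
#   kubectl -n harbor get pvc --no-headers | all_pvcs_bound && echo "all PVCs bound"
#   kubectl -n harbor wait --for=condition=Ready pod --all --timeout=600s
```

Pods that still fail to become Ready after the PVCs are bound are usually the image pull problems mentioned above. `kubectl -n harbor get pods` shows which ones they are.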

6. Edit containerd's config.toml

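On every node that will pull from Harbor, edit the registry section of containerd's config (by default /etc/containerd/config.toml) as follows, then restart containerd with systemctl restart containerd. The http:// endpoint under mirrors is what lets containerd talk to Harbor over plain HTTP. config_path must stay empty, because containerd rejects the mirrors and configs tables when config_path is set.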

[plugins."io.containerd.grpc.v1.cri".registry]
  config_path = ""

  [plugins."io.containerd.grpc.v1.cri".registry.auths]

  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."10.0.1.184:30002"]
      [plugins."io.containerd.grpc.v1.cri".registry.configs."10.0.1.184:30002".tls]
        insecure_skip_verify = true
      [plugins."io.containerd.grpc.v1.cri".registry.configs."10.0.1.184:30002".plain_http]
        skip_verify = true

  [plugins."io.containerd.grpc.v1.cri".registry.headers]

  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."10.0.1.184:30002"]
      endpoint = ["http://10.0.1.184:30002"]

7. Verify pushing and pulling images

First, log in to Harbor:

nerdctl --insecure-registry login 10.0.1.184:30002
# then enter the username and password
Enter Username: admin
Enter Password:
WARN[0015] skipping verifying HTTPS certs for "10.0.1.184:30002"
WARNING! Your credentials are stored unencrypted in '/root/.docker/config.json'.
Configure a credential helper to remove this warning. See
https://docs.docker.com/go/credential-store/

Login Succeeded

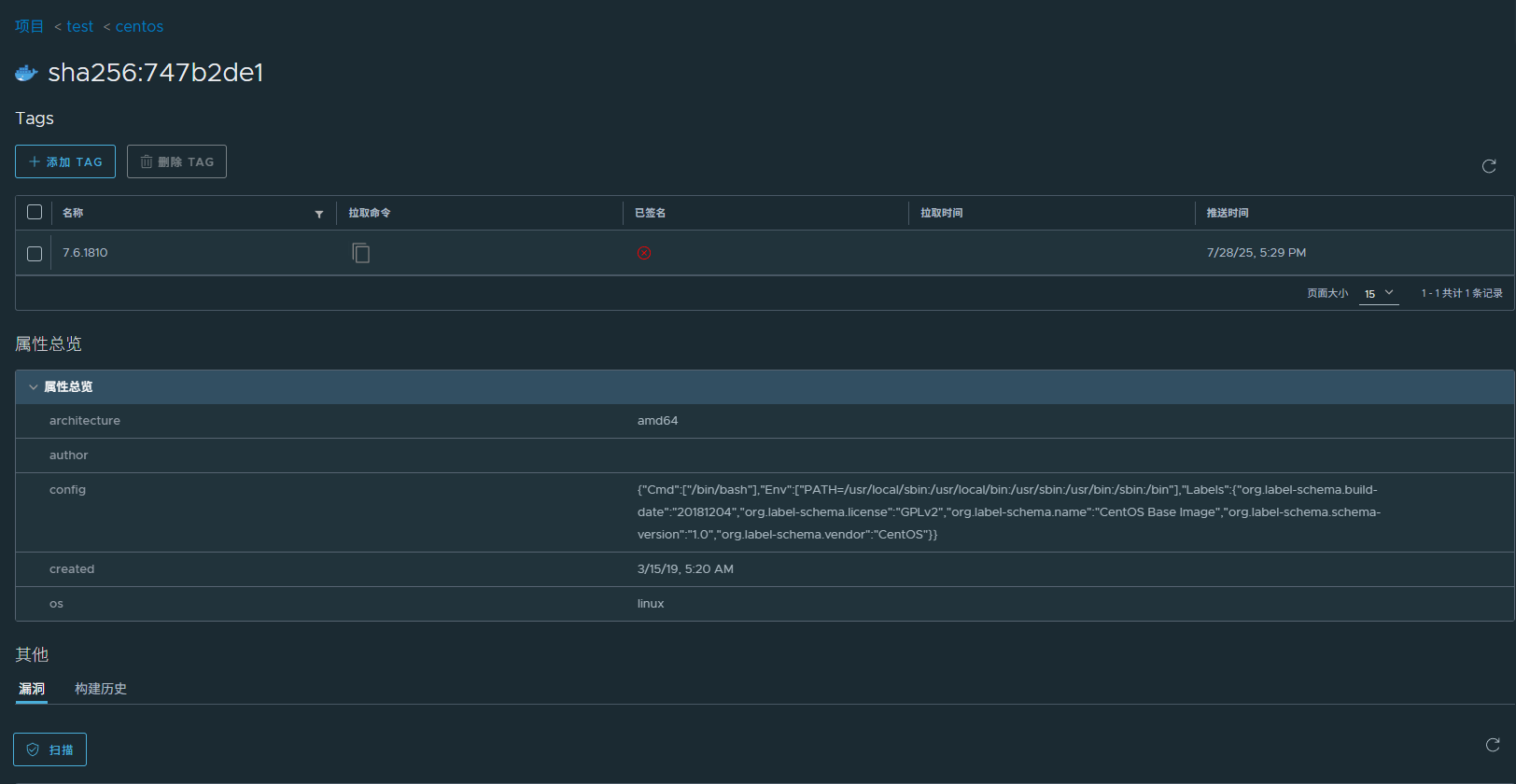

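Then retag an image and try pushing it. The target project (test here) must already exist in Harbor, so create it in the web UI first. A fresh Harbor only has the library project.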

nerdctl -n k8s.io tag swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/centos/centos:7.6.1810 10.0.1.184:30002/test/centos:7.6.1810
# push
nerdctl --insecure-registry -n k8s.io push 10.0.1.184:30002/test/centos:7.6.1810
# pull
nerdctl --insecure-registry pull 10.0.1.184:30002/test/centos:7.6.1810
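When more than a handful of images need to go into Harbor, the tag-and-push pair can be wrapped in a small function. This is a sketch under my own assumptions. harbor_ref and push_to_harbor are made-up names, and HARBOR is the address used in this article. The target name keeps only the last path component of the source image, so two sources that end in the same name:tag would collide in one project.

```shell
#!/usr/bin/env bash
# Sketch: retag an image for Harbor and push it, mirroring the commands above.
# HARBOR is this article's address; helper names are hypothetical.
HARBOR="10.0.1.184:30002"

# Map a source image to <harbor>/<project>/<last path component>, e.g.
# swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/centos/centos:7.6.1810 + test
#   -> 10.0.1.184:30002/test/centos:7.6.1810
harbor_ref() {
  local src=$1 project=$2
  printf '%s/%s/%s\n' "$HARBOR" "$project" "${src##*/}"
}

# Tag an image that already exists in containerd's k8s.io namespace and push it.
push_to_harbor() {
  local src=$1 project=$2 dst
  dst=$(harbor_ref "$src" "$project")
  nerdctl -n k8s.io tag "$src" "$dst" &&
    nerdctl --insecure-registry -n k8s.io push "$dst"
}

# Example (needs nerdctl and a prior `nerdctl --insecure-registry login`):
#   push_to_harbor swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/centos/centos:7.6.1810 test
```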

Copyright notice: unless otherwise noted, all articles are original works of this site.

When reposting, please credit the source: https://sulao.cn/post/1107
