This cluster runs Kubernetes 1.23.0, with containerd 1.7.10 as the container runtime.

The planned cluster layout:

- 192.168.1.72 master1
- 192.168.1.73 master2
- 192.168.1.74 master3
- 192.168.1.75 node1
- 192.168.1.78 VIP
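The hostnames in this plan are used as node names later (for example `name: master1` in default.yaml), so every node should be able to resolve them. This sketch assumes you handle that through /etc/hosts. That is an assumption: the earlier prep notes may already cover it. The sketch prints the entries from the plan. `print_hosts_entries` and the `NODES` array are hypothetical names used for illustration only.

```shell
#!/usr/bin/env bash
# Sketch (assumption: name resolution via /etc/hosts).
# NODES mirrors the cluster plan above; the VIP is not a host, so it is left out.
NODES=(
  "192.168.1.72 master1"
  "192.168.1.73 master2"
  "192.168.1.74 master3"
  "192.168.1.75 node1"
)

# Print one "IP hostname" line per node, ready to append to /etc/hosts.
print_hosts_entries() {
  local entry
  for entry in "${NODES[@]}"; do
    printf '%s\n' "$entry"
  done
}
```

On each node (as root), run `print_hosts_entries >> /etc/hosts` once, after checking that the entries are not already present.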

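Next, deploy nginx and keepalived on each of the three masters. nginx load-balances across the three masters: it listens on port 16443, and the backends keep the default Kubernetes API server port 6443 unchanged.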

I won't repeat the earlier preparation steps here. See my previous deployment notes instead: https://sulao.cn/post/946.html

This post picks up directly at `kubeadm init`.

First, generate the YAML file that `kubeadm init` will use:

```shell
cd /etc/kubernetes
kubeadm config print init-defaults > default.yaml
```

Then edit the file. The defaults describe a single-master setup, so changes are required. Each change is marked with a comment below:

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 0.0.0.0 # replaces the placeholder; with 0.0.0.0 kubeadm auto-detects and advertises this node's default-route IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock # point to the containerd socket; leave unchanged if you still use Docker
  imagePullPolicy: IfNotPresent
  name: master1 # the default node name is not the hostname; replace it with this host's name
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
  certSANs: # add the master IPs and the VIP to the API server certificate
  - 192.168.1.72
  - 192.168.1.73
  - 192.168.1.74
  - 192.168.1.78
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # switch to a mirror reachable from mainland China
kind: ClusterConfiguration
kubernetesVersion: 1.23.0
controlPlaneEndpoint: 192.168.1.78:16443 # control-plane endpoint: VIP + nginx port 16443
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # add the Pod network CIDR
scheduler: {}
```

Before running the initialization, we need to bring up nginx and bind the VIP to one of the nodes. keepalived is configured later, once the cluster is up.
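It can also help to confirm that default.yaml actually contains the HA-specific edits before going further. The sketch below only greps for the expected lines from this post. It does not validate YAML syntax or indentation; `kubeadm init --config=default.yaml --dry-run` is the more thorough check. `check_kubeadm_config` is a hypothetical helper, and the patterns are the values assumed in this cluster plan.

```shell
#!/usr/bin/env bash
# Sketch: report which HA-specific settings are missing from a kubeadm config.
# Plain substring matching only (grep -F); YAML structure is not checked.
check_kubeadm_config() {
  local file="$1" missing=0 pattern
  for pattern in \
    'controlPlaneEndpoint: 192.168.1.78:16443' \
    'criSocket: unix:///var/run/containerd/containerd.sock' \
    'podSubnet: 10.244.0.0/16' \
    '- 192.168.1.78'; do
    # "--" stops grep from treating a pattern that starts with "-" as an option
    if ! grep -qF -- "$pattern" "$file"; then
      echo "missing: $pattern"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_kubeadm_config /etc/kubernetes/default.yaml` prints nothing and returns 0 when all four settings are present.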

First, install nginx and keepalived on each of the three masters:

```shell
yum install epel-release -y
yum install nginx nginx-mod-stream keepalived -y
```

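Next, configure nginx. It needs layer-4 (TCP) proxying. Without the nginx-mod-stream module, nginx reports that the `stream` directive is unsupported. For background on the configuration below, see my notes: https://sulao.cn/post/958.html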
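You can confirm that the stream module is actually loaded before writing the `stream` block. The config below pulls in `/usr/share/nginx/modules/*.conf`. This sketch assumes that, under the EPEL packaging, nginx-mod-stream drops a `load_module` line referencing `ngx_stream_module.so` into that directory. That is an assumption about typical CentOS layouts; `rpm -ql nginx-mod-stream` shows what your package really installs. `stream_module_loaded` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: succeed if any *.conf in the nginx modules directory loads the stream module.
# The default directory matches the include line in nginx.conf below.
stream_module_loaded() {
  local dir="${1:-/usr/share/nginx/modules}"
  # -s hides "No such file" errors when the glob matches nothing
  grep -qs 'ngx_stream_module\.so' "$dir"/*.conf
}
```

If `stream_module_loaded || echo "stream module not loaded"` prints the warning, reinstall nginx-mod-stream. Once the config is in place, `nginx -t` validates it.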

```nginx
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 4096;
    send_timeout        30;

    limit_conn_zone $binary_remote_addr zone=addr:5m;
    limit_conn addr 100;

    proxy_buffer_size       128k;
    proxy_buffers           32 32k;
    proxy_busy_buffers_size 128k;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    # Load modular configuration files from the /etc/nginx/conf.d directory.
    # See http://nginx.org/en/docs/ngx_core_module.html#include
    # for more information.
    # include /etc/nginx/conf.d/*.conf;
}

stream {
    log_format main '$remote_addr [$time_local] $status $session_time $upstream_addr $upstream_bytes_sent $upstream_bytes_received $upstream_connect_time';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-proxy {
        server 192.168.1.72:6443 weight=5 max_fails=2 fail_timeout=300s;
        server 192.168.1.73:6443 weight=5 max_fails=2 fail_timeout=300s;
        server 192.168.1.74:6443 weight=5 max_fails=2 fail_timeout=300s;
    }

    server {
        listen 16443;
        proxy_pass k8s-proxy;
    }
}
```

Then enable nginx at boot on all three masters and restart it:

```shell
systemctl enable nginx
systemctl restart nginx
```
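After the restart, each master should have nginx listening on 16443. The API servers are not running yet at this stage, so proxied connections to the 6443 backends will fail. The listener itself should still be up. This sketch checks that from `ss -lnt` output: it looks for a LISTEN row whose local address ends in `:PORT`. `port_listening` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read `ss -lnt` output on stdin and succeed if PORT is in LISTEN state.
# Column 4 of ss output is "Local Address:Port", e.g. 0.0.0.0:16443 or [::]:16443.
port_listening() {
  local port="$1"
  awk -v p=":$port" '
    $1 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
    END { exit !found }'
}
```

Typical use on a master: `ss -lnt | port_listening 16443 && echo "nginx is listening on 16443"`.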

With nginx in place, first bind the VIP to one of the masters by hand. Log in to any master and run:

```shell
ip addr add 192.168.1.78/24 dev eth0  # eth0 is my NIC name; change it to match yours
```
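To confirm the address is really on the interface, inspect `ip -4 addr show dev eth0`. Keep in mind that an address added with `ip addr add` is runtime-only and does not survive a reboot; `ip addr del 192.168.1.78/24 dev eth0` removes it. The sketch below is a hypothetical helper, `vip_bound`. It reads that output on stdin and looks for an exact `inet VIP/` match.

```shell
#!/usr/bin/env bash
# Sketch: succeed if `ip -4 addr show` output (on stdin) contains the given IPv4 address.
# -F treats the pattern literally, so the dots in the IP are not regex wildcards;
# the trailing "/" stops 192.168.1.78 from matching 192.168.1.780.
vip_bound() {
  local vip="$1"
  grep -qF "inet $vip/"
}
```

Usage: `ip -4 addr show dev eth0 | vip_bound 192.168.1.78 && echo "VIP is on this node"`.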

Don't start keepalived yet. We configure and start it last, after the cluster is deployed.

The preparation is now complete, so we can initialize the Kubernetes cluster:

```shell
kubeadm init --config=default.yaml
```

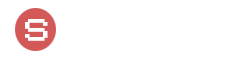

When initialization finishes, record the token and the other join information:

```shell
# join command for control-plane (master) nodes
kubeadm join 192.168.1.78:16443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:d911c095fc9d7419608bd5a7520410589b2b87443f8b6739808cda3a385f197d \
    --control-plane

# join command for worker nodes
kubeadm join 192.168.1.78:16443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:d911c095fc9d7419608bd5a7520410589b2b87443f8b6739808cda3a385f197d
```
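If you saved the init output (for example with `kubeadm init --config=default.yaml | tee kubeadm-init.log`, where the log filename is an assumption), the token and CA hash can be pulled out of that file instead of being copied by hand. The helpers `extract_token` and `extract_ca_cert_hash` are hypothetical. They rely only on the token format (6 + 16 lowercase alphanumerics) and on the `sha256:` prefix that kubeadm prints. The bootstrap token above has a 24h TTL. After it expires, `kubeadm token create --print-join-command` prints a fresh join command.

```shell
#!/usr/bin/env bash
# Sketch: pull join parameters out of a saved `kubeadm init` log.

# First bootstrap token, format [a-z0-9]{6}.[a-z0-9]{16}
extract_token() {
  grep -oE -- '--token [a-z0-9]{6}\.[a-z0-9]{16}' "$1" | head -n 1 | cut -d' ' -f2
}

# First discovery CA cert hash, e.g. sha256:<64 hex chars>
extract_ca_cert_hash() {
  grep -oE 'sha256:[0-9a-f]{64}' "$1" | head -n 1
}
```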

On Kubernetes versions below 1.24.0, the join commands above also need the `--cri-socket` flag:

```shell
--cri-socket=unix:///var/run/containerd/containerd.sock
```
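The version rule above can be encoded so that the flag is only appended when it is needed. This is a minimal sketch. It assumes GNU `sort -V` for version comparison and the VIP endpoint from this post. `version_lt` and `build_join_cmd` are hypothetical helpers. The function only prints the command, so you can review it before running it.

```shell
#!/usr/bin/env bash
# Sketch: build the kubeadm join command for a given Kubernetes version and role.

# True if version $1 is strictly lower than $2 (uses GNU sort -V).
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Usage: build_join_cmd VERSION ROLE TOKEN HASH   (ROLE: control-plane | worker)
build_join_cmd() {
  local version="$1" role="$2" token="$3" hash="$4"
  local cmd="kubeadm join 192.168.1.78:16443 --token $token --discovery-token-ca-cert-hash $hash"
  if [ "$role" = "control-plane" ]; then
    cmd+=" --control-plane"
  fi
  # Per this post, versions below 1.24.0 also need the containerd socket spelled out
  if version_lt "$version" "1.24.0"; then
    cmd+=" --cri-socket=unix:///var/run/containerd/containerd.sock"
  fi
  printf '%s\n' "$cmd"
}
```

For the masters in this cluster you would call `build_join_cmd 1.23.0 control-plane <token> <hash>`. The printed command then carries both `--control-plane` and `--cri-socket`.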

Adding the other masters takes a few extra steps. Log in to each master that is about to join and create the certificate directory:

```shell
mkdir -p /etc/kubernetes/pki/etcd
```

Copy the following certificate files from /etc/kubernetes/pki on the master that was just initialized to the same paths on the joining master:

```text
/etc/kubernetes/pki/ca.crt
/etc/kubernetes/pki/ca.key
/etc/kubernetes/pki/sa.key
/etc/kubernetes/pki/sa.pub
/etc/kubernetes/pki/front-proxy-ca.crt
/etc/kubernetes/pki/front-proxy-ca.key
/etc/kubernetes/pki/etcd/ca.crt
/etc/kubernetes/pki/etcd/ca.key
```
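The copy can be scripted from master1. This sketch assumes root SSH access from master1 to the joining master, and that the `mkdir` above has already been run there. It is dry-run by default: it only prints the `scp` commands. Pass `command` as the second argument to execute them. `copy_certs` and `CERTS` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: copy the shared CA / service-account / front-proxy / etcd CA files
# from this master to a joining master. Paths are relative to /etc/kubernetes/pki.
CERTS=(
  ca.crt ca.key sa.key sa.pub
  front-proxy-ca.crt front-proxy-ca.key
  etcd/ca.crt etcd/ca.key
)

# Usage: copy_certs TARGET_IP [echo|command]   (default "echo" = dry run)
copy_certs() {
  local target="$1" run="${2:-echo}" f
  for f in "${CERTS[@]}"; do
    "$run" scp "/etc/kubernetes/pki/$f" "root@${target}:/etc/kubernetes/pki/$f"
  done
}
```

For example, review `copy_certs 192.168.1.73` first, then run `copy_certs 192.168.1.73 command`.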

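Next, adapt the join command generated earlier. The key addition is the `--control-plane` flag, plus `--cri-socket` on Kubernetes versions below 1.24.0. Run it to join the node to the existing cluster as a master.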

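Once all masters have joined the cluster, configure keepalived in unicast, non-preemptive mode. In preemptive mode, the VIP always moves back to the highest-priority node. If that node's network is unstable, the VIP keeps bouncing between it and the other nodes, which causes stalled connections or timeouts. Non-preemptive mode avoids this.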

Edit /etc/keepalived/keepalived.conf on all three masters (the example below is for master1):
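Across the three masters, the file mainly differs in `unicast_src_ip` (the node's own IP), the `unicast_peer` list (every other master), and `priority`. The unicast part can be generated per node from the master list. This is a minimal sketch: `render_unicast` is a hypothetical helper, and its output is meant to be pasted into the `vrrp_instance` block shown below.

```shell
#!/usr/bin/env bash
# Sketch: print the unicast section of keepalived.conf for one node.
# Usage: render_unicast SELF_IP ALL_MASTER_IPS...
render_unicast() {
  local self="$1"; shift
  local ip
  printf '    unicast_src_ip %s\n' "$self"
  printf '    unicast_peer {\n'
  for ip in "$@"; do
    # a node never lists itself as a peer
    if [ "$ip" = "$self" ]; then
      continue
    fi
    printf '        %s\n' "$ip"
  done
  printf '    }\n'
}
```

On master2, for instance, `render_unicast 192.168.1.73 192.168.1.72 192.168.1.73 192.168.1.74` yields 192.168.1.73 as the source and 192.168.1.72 and 192.168.1.74 as peers.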

```
global_defs {
    router_id R1
    script_user root
    enable_script_security
}

vrrp_script chk_nginx {
    script "/etc/keepalived/check.sh"
    interval 3
}

vrrp_instance VI_1 {
    state BACKUP              # the other two masters also use BACKUP
    interface eth0            # change to each node's NIC name
    virtual_router_id 51      # VRID, must be identical on all nodes
    nopreempt
    priority 100              # priority; the other BACKUP nodes must use lower values
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    unicast_src_ip 192.168.1.72   # this node's own IP
    unicast_peer {
        192.168.1.73          # IPs of the other keepalived nodes, one per line (three keepalived nodes here)
        192.168.1.74
    }
    virtual_ipaddress {
        192.168.1.78/24       # VIP
    }
    track_script {
        chk_nginx             # run the health-check script
    }
}
```

Next comes the health-check script check.sh, which also lives in /etc/keepalived/:

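```shell
#!/bin/bash
# Health check run by keepalived (vrrp_script chk_nginx).
# If the nginx master process is gone, try restarting nginx once.
if ! pgrep -f "nginx: master process" > /dev/null; then
    systemctl restart nginx
    sleep 2
    # Still not running: stop keepalived so the VIP fails over to another master.
    # -x matches the process name exactly, so this script (whose path
    # contains "keepalived") is not matched as well.
    if ! pgrep -f "nginx: master process" > /dev/null; then
        pkill -x keepalived
    fi
fi
```

Make the script executable with `chmod +x /etc/keepalived/check.sh`. Because `enable_script_security` is set, keepalived also refuses to run a script that non-root users can modify.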

Finally, start keepalived on all three masters:

```shell
systemctl enable keepalived
systemctl start keepalived
```

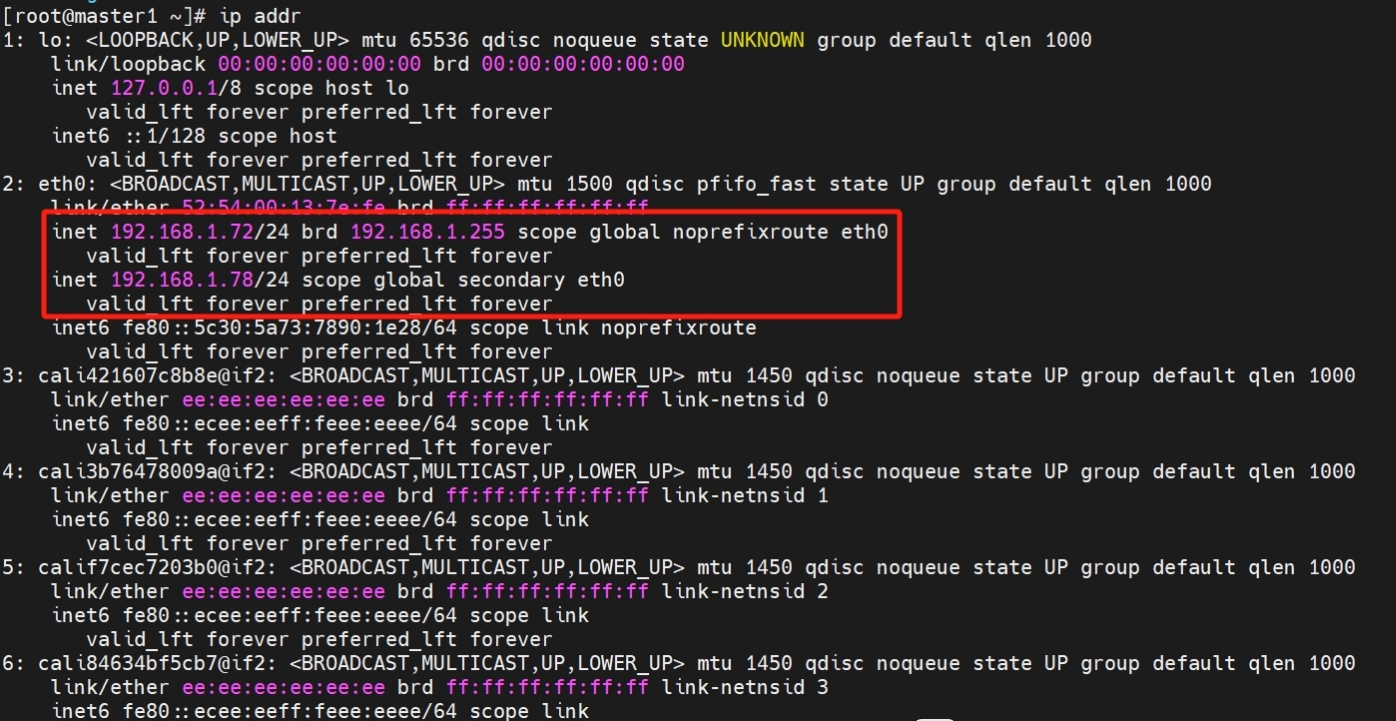

Now test the failover. First, confirm on master1 (192.168.1.72) that the VIP is bound to eth0:

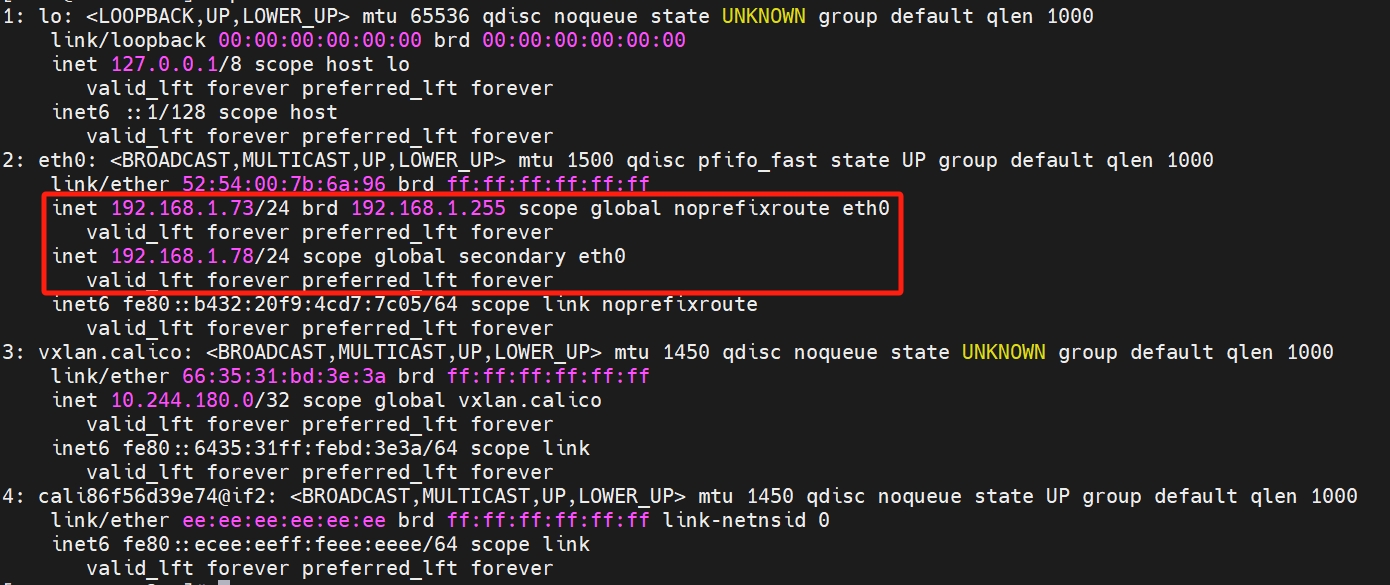

Next, shut down master1 and run `ip addr` on master2.

The VIP is now bound to master2 (192.168.1.73), and the Kubernetes cluster keeps working normally.
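You can also watch the failover from the client side, not just with `ip addr`. One option is to poll the API server through the VIP from a machine that stays up (node1, for example) while master1 is powered off. This sketch assumes that the default RBAC rules of this Kubernetes version let unauthenticated clients read `/healthz`, which returns `ok` when healthy. `-k` skips certificate verification to keep the probe short. `probe_apiserver` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: poll the API server through the VIP once per second and log ok/FAIL.
# Usage: probe_apiserver [URL] [ROUNDS]
probe_apiserver() {
  local url="${1:-https://192.168.1.78:16443/healthz}" rounds="${2:-60}" i body
  for ((i = 0; i < rounds; i++)); do
    # --max-time keeps a dead backend from stalling the loop
    if body=$(curl -sk --max-time 2 "$url") && [ "$body" = "ok" ]; then
      echo "$(date +%T) ok"
    else
      echo "$(date +%T) FAIL"
    fi
    if (( i + 1 < rounds )); then
      sleep 1
    fi
  done
}
```

Start `probe_apiserver` on node1, then power off master1. A short run of FAIL lines followed by ok again shows how long the VIP takeover actually took.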