Kubernetes already supports scheduling GPU devices and mounting them into Pod containers. The following setup is required:

1. Install the GPU driver on the host.

2. Install nvidia-container-runtime.

3. Label the GPU nodes and deploy k8s-device-plugin.

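Steps 1 and 2 are covered in my earlier notes, so here we go straight to deploying k8s-device-plugin. The plugin reports the number of GPUs on each GPU node to Kubernetes. After that, adding the GPU resource to the requests/limits section of a workload's YAML is enough for the Pod to be scheduled onto a GPU node with the GPU device mounted.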
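Step 3 also calls for labeling the GPU nodes, but the command is not shown. A minimal sketch, assuming the label `nodetype=gpu` that the manifest's node selector expects. The function name `label_gpu_node` and the `KUBECTL` override variable are conveniences introduced here for illustration; they are not part of kubectl.

```shell
# Label a node so the device-plugin DaemonSet can be scheduled onto it.
# The label nodetype=gpu must match the node selector in k8s-device-plugin.yaml.
# KUBECTL is a hypothetical override hook (e.g. KUBECTL=echo for a dry run).
label_gpu_node() {
  node="$1"
  "${KUBECTL:-kubectl}" label node "$node" nodetype=gpu --overwrite
}
```

Usage would look like `label_gpu_node gpu-node-1`, where `gpu-node-1` is a placeholder for a real node name. Running `kubectl get nodes -l nodetype=gpu` afterwards lists the nodes that carry the label.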

Save the following as k8s-device-plugin.yaml. Change the node label (nodetype: gpu here) to whatever you use on your own GPU nodes.

cat k8s-device-plugin.yaml

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: nvidia-device-plugin-daemonset
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          name: nvidia-device-plugin-ds
      updateStrategy:
        type: RollingUpdate
      template:
        metadata:
          labels:
            name: nvidia-device-plugin-ds
        spec:
          tolerations:
          # Allow scheduling onto nodes tainted for GPU workloads
          - key: nvidia.com/gpu
            operator: Exists
            effect: NoSchedule
          # Must match the label applied to your GPU nodes
          nodeSelector:
            nodetype: gpu
          priorityClassName: "system-node-critical"
          containers:
          - image: nvcr.io/nvidia/k8s-device-plugin:v0.13.0
            name: nvidia-device-plugin-ctr
            env:
              - name: FAIL_ON_INIT_ERROR
                value: "false"
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                drop: ["ALL"]
            volumeMounts:
            # The kubelet discovers device plugins through this directory
            - name: device-plugin
              mountPath: /var/lib/kubelet/device-plugins
          volumes:
          - name: device-plugin
            hostPath:
              path: /var/lib/kubelet/device-plugins

Then deploy it with apply:

kubectl apply -f k8s-device-plugin.yaml

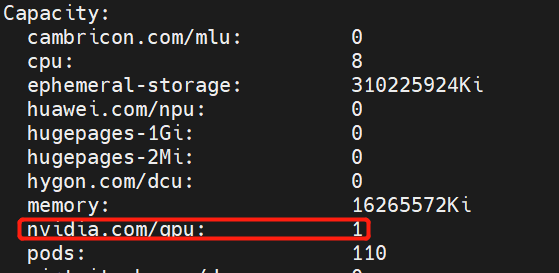

Once it is deployed, run kubectl describe on a GPU node to see the GPU information registered in Kubernetes.
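The check can be narrowed to just the GPU lines. A small sketch, assuming the plugin registers the resource under the name `nvidia.com/gpu` (the name used in the resource request in this note). The helper name `show_node_gpus` and the `KUBECTL` override variable are made up for this example.

```shell
# Print only the nvidia.com/gpu lines from `kubectl describe node <name>`.
# These normally appear in the Capacity and Allocatable sections once the
# device plugin has registered with the kubelet.
# KUBECTL is a hypothetical override hook, not a kubectl feature.
show_node_gpus() {
  "${KUBECTL:-kubectl}" describe node "$1" | grep -F 'nvidia.com/gpu'
}
```

For example, `show_node_gpus gpu-node-1` (placeholder node name). If nothing is printed, the plugin Pod is probably not running on that node, which `kubectl -n kube-system get pods -o wide` should reveal.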

Then add the following to the container spec in the workload's YAML:

    resources:
      requests:
        nvidia.com/gpu: 1
      limits:
        nvidia.com/gpu: 1
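To see where that fragment sits in a full manifest, here is a hedged sketch of a throwaway test Pod that requests one GPU and runs `nvidia-smi` once. The Pod name `gpu-test`, the file name `gpu-test-pod.yaml`, and the image tag are assumptions chosen for this example; substitute any CUDA image available to your cluster.

```shell
# Write a throwaway Pod manifest that requests one GPU.
# Pod name, file name, and image tag are placeholders for illustration.
cat > gpu-test-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gpu-test
spec:
  restartPolicy: Never
  containers:
    - name: cuda
      image: nvidia/cuda:12.2.0-base-ubuntu22.04
      command: ["nvidia-smi"]
      resources:
        requests:
          nvidia.com/gpu: 1
        limits:
          nvidia.com/gpu: 1
EOF
```

After `kubectl apply -f gpu-test-pod.yaml`, the output of `kubectl logs gpu-test` should list the GPU if the device was mounted. Note that for an extended resource such as `nvidia.com/gpu`, requests and limits have to be equal, which is why both are set to 1.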