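I wanted to practice writing some simple log rules in my test environment, so I used MongoDB's logs for the experiment. I won't cover the overall ELK setup again here; if you're not familiar with it, see my earlier notes: https://sulao.cn/category-14_2.html. This post starts from Filebeat on the client side.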

First, install Filebeat on the MongoDB server:

    wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.4.0-x86_64.rpm
    yum localinstall filebeat-7.4.0-x86_64.rpm

Next, configure Filebeat:

    # cat /etc/filebeat/filebeat.yml
    filebeat.inputs:
    - type: log
      enabled: true
      paths:
        - /usr/local/mongodb/logs/mongodb.log
      tags: ["mongo-logs"]

    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: false

    setup.template.settings:
      index.number_of_shards: 1

    setup.kibana:

    #output.elasticsearch:
    #  hosts: ["localhost:9200"]

    output.logstash:
      hosts: ["122.51.230.106:5044"]

    processors:
      - add_host_metadata: ~
      - add_cloud_metadata: ~

Next, configure the filter rules in Logstash:

input {

beats {

port => 5044

codec => plain {

charset => "UTF-8"

}

}

}

filter {

if "mongo-logs" in [tags] {

grok {

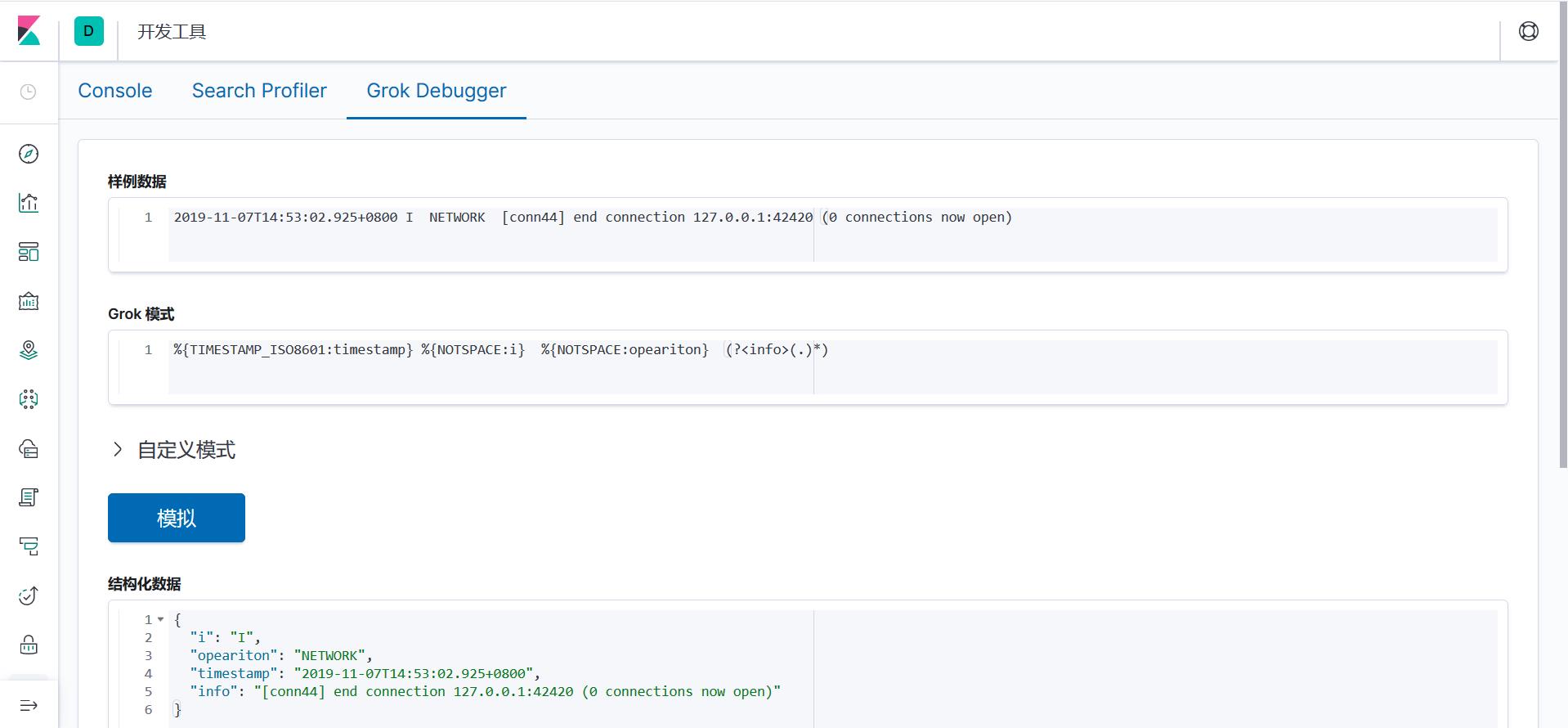

match => ["message", '%{TIMESTAMP_ISO8601:timestamp} %{NOTSPACE:i} %{NOTSPACE:opeariton} (?<info>(.)*)']

}

mutate {

remove_field => ["message"]

remove_field => ["i"]

}

}

if "beats_input_codec_plain_applied" in [tags] {

mutate {

remove_tag => ["beats_input_codec_plain_applied"]

}

}

}

output {

if "mongo-logs" in [tags] {

elasticsearch {

hosts => "122.51.230.106:9200"

manage_template => false

index => "mongo-%{+YYYY.MM.dd}"

#document_type => "%{[@metadata][type]}"

}

}

}grok的规则我们可以在kibana上可以边测试边写,在开发工具Grok Debugger内
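If Kibana is not at hand, the same rule can be roughly checked offline. The following is only a minimal Python sketch, not the grok engine itself: the `TIMESTAMP_ISO8601` regex is a simplified stand-in for grok's real pattern, `parse_mongo_line` is a hypothetical helper name, and the sample line is an illustrative entry in MongoDB's plain-text (pre-4.4) log format rather than output from the server used in this post.

```python
import re

# Simplified approximation of grok's TIMESTAMP_ISO8601 (assumption: the real
# grok pattern accepts more variants than this one does).
TIMESTAMP_ISO8601 = (
    r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:[.,]\d+)?(?:Z|[+-]\d{2}:?\d{2})?"
)

# NOTSPACE in grok corresponds to \S+; the last group takes the rest of the line.
MONGO_LINE = re.compile(
    rf"(?P<timestamp>{TIMESTAMP_ISO8601}) (?P<i>\S+) (?P<operation>\S+) (?P<info>.*)"
)


def parse_mongo_line(line):
    """Return the captured fields as a dict, or None if the line does not match."""
    match = MONGO_LINE.search(line)  # grok is unanchored as well
    if match is None:
        return None
    fields = match.groupdict()
    fields.pop("i")  # mirrors the mutate step that removes the "i" field
    return fields


# Illustrative sample line (assumption), not taken from the author's server.
sample = (
    "2014-11-03T18:28:32.450-0500 I NETWORK "
    "[initandlisten] waiting for connections on port 27017"
)
print(parse_mongo_line(sample))
```

A line that returns `None` here would most likely also end up with a `_grokparsefailure` tag in Logstash, so this can serve as a quick sanity check before pasting the pattern into the debugger.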

I won't go over adding the index in Kibana again here either. Finally, let's take a look at the collected MongoDB logs in Kibana.
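When adding the index in Kibana, it may help to know what names the output section produces. Logstash expands `%{+YYYY.MM.dd}` from the event's `@timestamp`, which is in UTC, so an event logged early in the morning on a UTC+8 server lands in the previous day's index. The sketch below only imitates that naming in Python; `daily_index` is a hypothetical helper, and matching the result with a pattern such as `mongo-*` is an assumption rather than something stated above.

```python
from datetime import datetime, timedelta, timezone


def daily_index(event_time, prefix="mongo-"):
    """Imitate index => "mongo-%{+YYYY.MM.dd}": prefix plus the UTC date.

    event_time must be a timezone-aware datetime.
    """
    utc_time = event_time.astimezone(timezone.utc)
    return prefix + utc_time.strftime("%Y.%m.%d")


# 07:30 on 2020-01-01 in UTC+8 is still 2019-12-31 in UTC.
local_time = datetime(2020, 1, 1, 7, 30, tzinfo=timezone(timedelta(hours=8)))
print(daily_index(local_time))
```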